Privacy by Design: Building Trust for AI-Driven Sustainability

Why AI Needs Trust to Scale Sustainability Implementation

Artificial intelligence is transforming the way organizations approach decarbonization. From optimizing energy procurement to forecasting emissions, AI enables scale and speed that manual processes simply cannot match. Agentic AI represents the frontier of AI development, and by 2028, Gartner predicts that 33% of enterprise software applications will include agentic AI, up from less than 1% in 2024.

Artificial intelligence is transforming the way organizations approach decarbonization. From optimizing energy procurement to forecasting emissions, AI enables scale and speed that manual processes simply cannot match. Agentic AI represents the frontier of AI development, and by 2028, Gartner predicts that 33% of enterprise software applications will include agentic AI, up from less than 1% in 2024.

Organizations are racing to deploy these autonomous agents that can transform data quality and tackle global sustainability challenges. But with this speed and power comes responsibility. Sensitive ESG data, regulatory complexity, and customer trust demand that innovation be balanced with rigorous governance.

At SE Advisory Services, we’ve embedded privacy by design, through data governance, into every layer of our AI-powered platform. This is the foundation of how we build our intelligent systems. Our mission is clear: enable global decarbonization while ensuring data security, transparency, and compliance.

Even above AI and automation features, data protection is a core priority for Schneider Electric. As Kevin Price, Director and Software Architect, puts it: “How we protect data and how we expose data is the most critical piece of the overall technology architecture.”

So how does Schneider Electric, the most sustainable company in the world, lead the energy technology transition securely and sustainably?

Data Privacy and Governance Take Priority in Energy AI

For sustainability leaders, ESG (Environmental, Social, and Governance) data is more than numbers—it’s strategic, confidential, and often subject to regulations like Europe’s GDPR (General Data Protection Regulation), the USA’s CCPA (California Consumer Privacy Act), and Asia’s various national laws like China’s PIPL (Personal Information Protection Law) and Japan’s APPI (Act on the Protection of Personal Information). Missteps with data can erode trust and stall progress. Common concerns from leaders include:

- Data misuse or unauthorized access

- Opaque AI decision-making

- Classification of ESG data as confidential

Because our SE Advisory Services clients are navigating complex decarbonization journeys, they need confidence that as they step into the world of Agentic AI, their data is secure and used responsibly. That’s why establishing governance that can speak to and solve these concerns from the start is essential.

How do we ensure that our clients' data is secure?

Architecting for Privacy: A Ground-Up Approach

The Schneider Electric Agentic AI software is designed with data protection as a core priority using a variety of approaches:

Cryptographic Identity Verification

A security process that uses cryptographic techniques such as digital signatures, public-key infrastructure (PKI), and encryption to confirm that an entity (person, device, or system) is who they claim to be. Every data access request is cryptographically verifiable and tied to user identity via federated identity providers.

Data Segregation Across Clients

Agents operate under the same permissions as the user they serve. For example, if a Schneider Electric Director of Data Science can access five websites, so can his AI assistant; no more, no less. Because they are constrained in a deterministic and hard-coded way, our models cannot be the victims of jailbreaking. In plain terms, you cannot trick our AI agent into seeing someone else’s data.

Anonymity and Zero Personally Identifiable Information (PII)

We do not store direct identifiers like company names or personal data. PII is any data that identifies an individual. Schneider Electric avoids using PII to protect privacy and ensure compliance. Andy Hutchison, a Schneider Electric Cybersecurity Officer, explains: “Security is data protection. We built our Agentic AI so that access is cryptographically verifiable—no shortcuts.”

Agents Built to Meet Standards and Compliance

The importance of data governance isn’t just for internal purposes; it aligns with global standards, ensuring the accuracy, security, and ethical use of customer data.

The Schneider Electric team holds to the following standards and compliance:

- ISO 42001 (AI Governance) and ISO 27001 (Security)

Schneider Electric experts use ISO 42001 and ISO 27001 principles embedded directly into the development lifecycle. ISO 42001 ensures that every AI feature is governed through documented risk assessments, human oversight, and transparent decision logs, while ISO 27001 enforces strict controls for data confidentiality, integrity, and availability. Together, we follow these standards to shape a secure, auditable environment where AI agents, like the Schneider Electric Advisory Services Agents, operate within hard-coded permissions.

- EU AI Act risk-based framework

The most stringent AI regulations were passed by the European Union as part of the EU Artificial Intelligence Act. Schneider Electric abides by those regulations and has leveraged our risk categorization framework when developing solutions.

The EU Artificial Intelligence Act establishes a risk-based framework for AI regulation. We follow these guidelines and apply their risk categorization when designing solutions. The Act classifies AI use cases into four tiers: unacceptable risk, high risk, limited risk, and minimal risk. Unacceptable risk prohibits things like social scoring systems and manipulative AI. High-risk systems involve sensitive personal data, facial recognition, or performance-critical applications—none of which is used by Schneider Electric. Limited-risk systems require transparency to ensure clients receive accurate insights for decisions such as investment opportunities, site upgrades, and PPAs. Minimal-risk systems face no significant restrictions.

Additionally, the EU AI Act keeps company and human data safe by prohibiting the following types of AI systems: AI systems that deploy subliminal, manipulative, or deceptive techniques, systems that exploit vulnerabilities, biometric categorization systems, social scoring, compiling facial recognition databases, inferring emotions in workplaces or educational institutions, and assessing the risk of an individual committing criminal offenses.

Schneider Electric is dedicated to upholding the EU AI Act by adhering to its risk-based framework, ensuring transparency, prohibiting banned practices, and implementing compliance measures for all AI-driven solutions.

- SOC 2 Type II Certification

SOC 2 Type II certification is a cornerstone of how we build Agentic AI with trust at its core. This framework validates that our security, confidentiality, and availability controls are not only designed effectively but operate consistently over time—through rigorous, independent audits. By embedding these controls into our development lifecycle, from penetration testing to continuous monitoring, we ensure every AI agent action is aligned with industry-leading standards, giving sustainability leaders confidence that innovation never comes at the expense of data integrity or compliance.

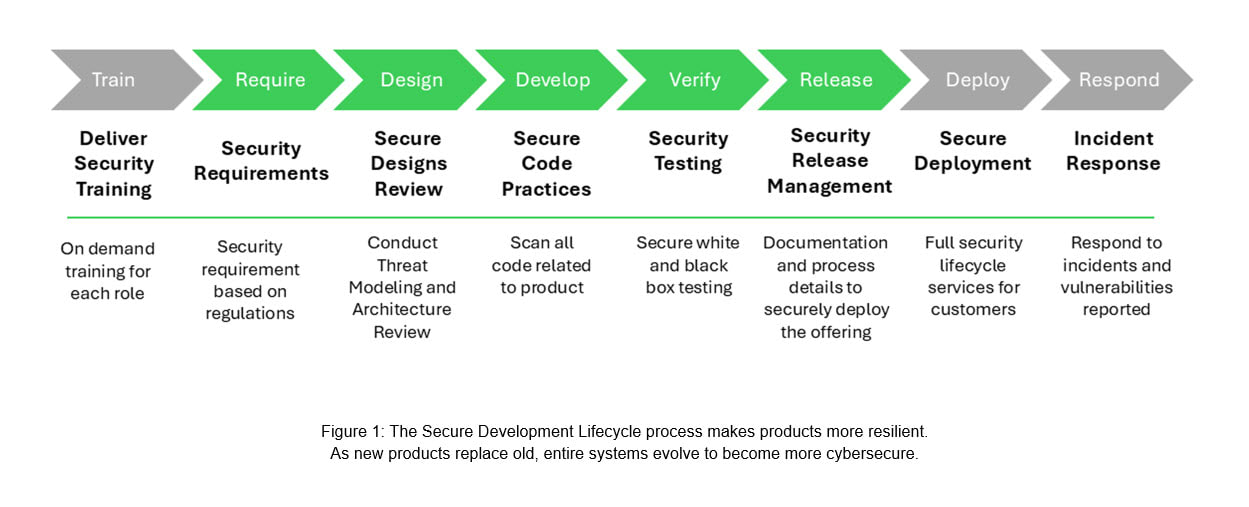

- Secure Development Lifecycle (SDL) integrated into Development Operations

Our process applies the Secure Development Lifecycle (SDL) by embedding security checks, privacy assessments for every product, and risk modeling into every stage of software development, from design to deployment. Integrated with DevOps, this means continuous code scanning, penetration testing, and automated vulnerability scanning using tools like GitHub, Coverity, and Black Duck—ensuring that Agentic AI is built on a foundation of security and compliance from day one.

Schneider Electric’s “Cybersecurity by Design” whitepaper states, “The Secure Development Lifecycle process makes products more resilient. As new products replace old, entire systems evolve to become more cybersecure.” In the graph below, you can see the progression from training, development, to incident response.

The SDL process means that every decision made by Schneider Electric’s Agentic AI is auditable. If an ESG indicator response appears, we can trace it back to the source document and reasoning step. The system is designed to be transparent, showing each decision made by the AI. If a statement is made (e.g., “safety incidents reduced by 83%”), it should be backed by actual data or documentation, with citations and traceability. Our clients want AI-driven software that is trustworthy. If a decision is being made in the platform, we can audit the process asking, “why was it made?” and “why did it phrase a response the way it did?”

As Jeff Willert, Director of Data Science, reminds us: “These AI tools… they’re not almighty… they’re still very much constrained.”

Educated for Automation: How Private is User Data?

One of the most common questions we hear: “What data was used to train the model?”

Our answer: no client data. We made a deliberate choice not to train LLMs on client data. Why? Because fine-tuning on proprietary data risks leakage across clients. Instead, we use dynamic retrieval in which agents retrieve external information when needed, within strict permissions.

We train our AI using synthetic data, meaning artificially generated data that mimics the patterns and structures of real-world data. It does not contain any actual proprietary information or personal data. This approach is compliant with GDPR, preserving confidentiality, and avoiding bias from any sensitive datasets.

“Agents don’t roam freely. They are intentionally scoped to inherit all the platform’s built-in security controls — including identity, context, permissions, and data access restrictions. This ensures every agent acts only within the same boundaries and privileges as the authenticated user it represents.”

- Kevin Price

Mitigating Risks of AI Usage

Risk management is multi-layered and practiced continuously:

- Rigorous Testing

We validate inputs and outputs, ensuring accuracy and reasoning integrity. Jeff Willert describes the testing process, which involves building ground truth datasets with known inputs and outputs. Agents are tested against these to ensure accuracy. He explains that agents often perform multi-step reasoning, and each step is validated. Jeff says, “Even if it’s giving you the right answer but for the wrong reasons, that’s concerning.” If an agent cannot reliably answer a question, it is instructed to say “I can’t answer that” rather than risk giving incorrect information.

- Defense in Depth

Azure’s LLM guardrails prevent harmful or irrelevant content. Internal filters block off-topic queries, like “spaghetti sauce recipes,” for example. Sentiment and adversarial behavior checks add another layer.

- Constrained Agents

“Constrained Agents” strategy is central to our AI safety. Agents reject malformed prompts and operate within narrow parameters. For example, an AI tool that recommends renewable energy procurement options but is restricted to verified, low-carbon sources cannot suggest fossil-fuel-based solutions. Before launching, penetration testing precedes every release.

- Continuous Updates

Large Language Models (LLMs) evolve rapidly, becoming smarter and more efficient. We adapt through agile development, ensuring customers always benefit from cutting-edge performance without compromising safety. Frugal, or sustainable, AI works similarly as models are being adapted and updated to be more efficient using fewer resources.

- AI Risk Assessments

Each AI solution undergoes an assessment to review the adherence to EU AI Act, Responsible AI Review, threat modeling, and code scanning. Our experts review and have access to each assessment and are active in establishing best practices.

Looking Ahead: Governance in a Rapidly Changing Landscape

AI governance isn’t static. We continuously improve through audits, customer feedback, and quarterly transparency reviews.

In order to make improvements, we must have clear insights. This means that every agent interaction is logged, including every tool call, and every reasoning step. If there are errors, we know exactly where to go back and fix them. Ironically, this makes AI more transparent than human decision-making, where assumptions and reasoning often go undocumented.

Mistakes happen, whether by humans or AI. The goal isn’t blame; it’s accountability. Schneider Electric asks the question, “How can we create a trail of what we are doing that we can always refer back to when necessary?” By creating a clear audit trail, we build resilience and trust.

Conclusion: Responsible AI as a Catalyst for Decarbonization

Privacy by design is more than just a compliance checkbox for Schneider Electric. We prioritize it as our strategic enabler. By embedding governance into all AI Agents, sustainability leaders can harness AI confidently, accelerating progress toward their sustainability goals.

Ready to learn more? Explore how our AI-powered solutions can support your decarbonization journey. Request a free demo of our AI software solution today.