Efficiency Squared: Balancing the Power of AI and Responsibility

As the development of Artificial Intelligence systems accelerates at an unprecedented pace, a critical question emerges: How do we harness that power responsibly?

“True innovation isn't measured just by compute power but by human impact. The most powerful AI in the future will be the one that's efficient enough to scale, inclusive enough to serve, and ethical enough to endure. Frugal AI redefines progress by asking how we can design intelligence that is open, accessible, and truly in service of all.” — University of Cambridge

This quote captures the heart of a growing movement, one that’s pushing us to rethink what progress means as agentic AI continues to evolve at an incredible pace.

New agentic AI models are now capable of autonomously generating workflows and adapting strategies in real time, a leap that took traditional AI systems years to achieve. In just the past 12 months, enterprise platforms have gone from reactive automation to predictive, self-directed decision-making, reshaping how supply chains operate at scale. This rapid evolution is forcing companies to rethink their digital strategies almost quarterly to stay competitive.

As organizations race to deploy autonomous agents that can transform data quality and tackle global sustainability challenges, three words rise to the surface: efficient, inclusive, and ethical.

These words present both a challenge and an opportunity.

While AI holds immense potential, it also comes with a cost. A cost measured not only in currency, but in energy, emissions, and equity. The question is no longer can we build powerful AI systems, but should we build them the way we always have?

To answer that, we must start with frugal AI: intelligence built to do more with less.

The Challenge: AI’s Energy Appetite

Andrew Winston of Winston Eco-Strategies recently shared in an interview with Schneider Electric, “There is a misconception that digital is ‘light,’ but that’s the problem. There is massive energy usage, heat, and servers—the physical footprint is much larger than people realize. If you're not intentional, it can get very expensive.”

Agentic AI, like all forms of AI, relies on data centers to meet its high computational demands. These centers provide scalability, cloud-based deployment, and security, but they also come with a steep energy bill:

- AI-specific demand is projected to reach 44 gigawatts (GW) in 2025, out of a total 82 GW for all data center workloads.

- Global data center electricity use is expected to more than double, from 460 terawatt-hours (TWh) in 2022 to over 1,000 TWh by 2026, largely driven by AI.

- A single five-acre AI data center equipped with GPUs can consume up to 50 megawatts (MW), a tenfold increase over traditional CPU-based centers.
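
To put the figures above in proportion, here is a short arithmetic sketch. It uses only the numbers quoted in the list; the variable names are illustrative, and the 2026 value is taken as the lower bound of "over 1,000 TWh."

```python
# Figures quoted in the list above.
ai_demand_gw = 44       # projected AI-specific demand, 2025
total_demand_gw = 82    # projected demand for all data center workloads, 2025
use_2022_twh = 460      # global data center electricity use, 2022
use_2026_twh = 1000     # lower bound of the 2026 projection

ai_share = ai_demand_gw / total_demand_gw
growth_factor = use_2026_twh / use_2022_twh

print(f"AI share of data center demand: {ai_share:.0%}")
print(f"Growth in electricity use, 2022 to 2026: at least {growth_factor:.1f}x")
```

In other words, AI would account for a little over half of projected data center demand.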

Frugal AI

To better understand Frugal AI at Schneider Electric, we spoke with Jeff Willert, a Director of Data Science at Schneider Electric.

As a technology leader at the world’s #1 leader in sustainability, Jeff often considers two questions when building AI solutions:

- Do we need AI Agents to solve all our problems?

- Do we need to build a model for every business need?

Quoting Steve Wilhite, Executive Vice President of the Sustainability Business, Jeff said, “Agents are the new shiny hammer, but not everything is a nail.”

Impact Considerations When Designing Agentic AI

It’s important to begin with an understanding of the pressures all companies face to expand and innovate with intelligent technology. These include:

- Economic Pressure to innovate and integrate AI at different speeds. AI agents have capabilities to scale with very minimal marginal costs compared to human workers. In addition, they operate 24/7 and maintain consistent quality. Companies undoubtedly feel the pressure to adopt AI and remain competitive in their respective spaces. When it comes to AI agents, most emissions come from frequent use (inference), not the one-time training process. While training consumes a lot of energy at once, the small energy cost of each inference adds up quickly with large-scale daily use. This is something that companies should keep in mind.

- Environmental Pressure to protect the climate is felt by many, particularly around water usage. Large volumes of water are required to cool servers in data centers. According to the World Economic Forum, GPT-3 is estimated to use about one 16-ounce bottle of water for every 10-50 responses, and AI’s projected annual water withdrawal could reach 6.6 billion cubic meters by 2027.

- Resource Pressure is felt by many small businesses and emerging markets, which have a hard time getting into the game (larger models are more efficient and more expensive). AI agents require a significant investment in infrastructure, ongoing maintenance, and licensing, creating a barrier for those without robust technology capabilities.
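
The point about inference outweighing training can be made concrete with a break-even calculation. The sketch below is illustrative only: the function name and every number in it are hypothetical placeholders, not measurements of any real model.

```python
def breakeven_requests(training_kwh: float, kwh_per_request: float) -> float:
    """Requests at which cumulative inference energy equals training energy."""
    if kwh_per_request <= 0:
        raise ValueError("kwh_per_request must be positive")
    return training_kwh / kwh_per_request

# Hypothetical placeholder values, not measurements of any real model.
TRAINING_KWH = 1_000_000       # one-time training energy
KWH_PER_REQUEST = 0.002        # energy per inference request
REQUESTS_PER_DAY = 5_000_000   # daily usage at scale

requests = breakeven_requests(TRAINING_KWH, KWH_PER_REQUEST)
days = requests / REQUESTS_PER_DAY
print(f"Inference overtakes training after {requests:,.0f} requests ({days:.0f} days)")
```

With these assumed values, cumulative inference energy passes the training cost after roughly 100 days of use, and every day after that adds to the gap.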

Starting with Efficient Architecture

Keeping these pressures in mind, Schneider Electric believes that successful and sustainable agents start at the same place, with efficient architecture, which is built into the strategy from day one. Carlos Ribadeneira, a leader in AI-driven data operations, and member of Jeff Willert’s Data Science team, asks, “How do we innovate without breaking the bank or the planet?” The answer, he explained, is that “every model we build is designed from the ground up with efficiency.”

But before the building begins, Schneider Electric embraces a sustainable mindset.

Dan Whitsell, Chief Technology Officer for Schneider Electric’s Sustainability Business, explains how a sustainable mindset harnesses the power of responsible and ethical AI to best serve the people and the planet, reducing harmful impact.

Building with a Sustainability Mindset

Frugal AI is more than just a strategy; it’s a mindset that drives success. Carlos explains further, “It’s a principle, not just something we advocate for. We make a conscious effort in every project to be frugal.”

With Jeff’s leadership, Schneider Electric consistently evaluates what it is doing and how it is doing it. Jeff emphasizes that in many cases, AI or generative AI may not be necessary, so the focus is on identifying the best and most cost-effective solution.

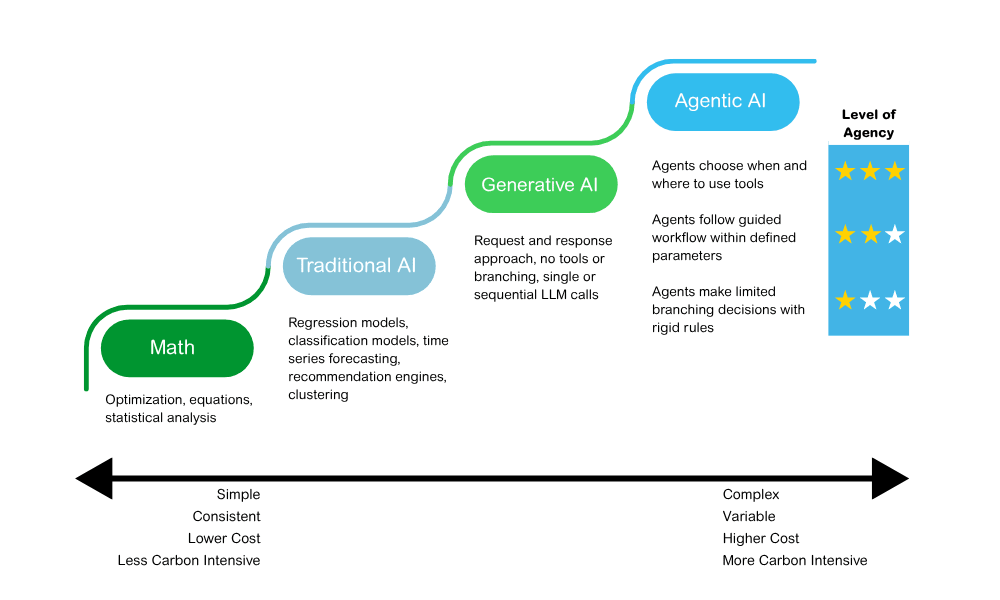

Choosing the Right AI Model: Four Tiers with Unique Applications

Model selection is crucial in managing carbon output. The goal is to find which model will perform the best without using more computation power than necessary. The accompanying graphic shows a variety of solution options that can be applied to many problems.

Math: The first tier shows the most basic software development and applied math with linear regression. Think of a math equation, “if you want X, then do Y.”

Many problems we encounter don't require AI to deliver value to the customer. Some solutions can be addressed through traditional software development, while others might require mathematics, statistics, or optimization algorithms. If we can solve a problem with these techniques, they often yield the most efficient solution.

Traditional AI: The second tier includes artificial intelligence and machine learning through classification algorithms, recommendation engines, and more. These algorithms can vary in complexity, but all demonstrate the ability to “learn” based on a set of training data. While data scientists may provide a framework for the algorithm, the actual “weights” or “parameters” are learned by experiencing the training data. While these models can be quite complicated, in most cases, their complexity pales in comparison to tools in the Generative AI space.

Generative AI: The third tier consists of models like ChatGPT, Claude, Perplexity, and other emerging AI systems. Generative AI refers to AI systems that can create new content, such as images, text, or even music. Unlike traditional AI, which focuses on analyzing and predicting based on existing data, generative AI can produce new content. Generative AI models work by learning patterns from massive datasets and then generating new content that follows those patterns. As the number of parameters increases, more capability or intelligence is often observed, but this comes at the cost of increased power consumption and a bigger carbon footprint.

Agentic AI: The fourth and most powerful system is the agentic model. Agentic systems leverage a series of large language model (LLM) invocations, one after another, as the agent works to accomplish its task, refining its plan along the way. These models hold a vast amount of power and are designed to perform tasks on behalf of the user. However, their complexity also contributes to increased carbon emissions. Large language models are often viewed as black boxes, meaning we can’t necessarily observe why specific tokens are generated. Agents rely on these LLMs for reasoning and tool-calling, but they become slightly more interpretable because we can store and review their “thought processes” and “reasoning steps.” This visibility helps refine agent behavior when we see suboptimal outcomes.

So, what is the best approach? The answer, Jeff says, is to push things as low in the spectrum as we can. This typically means a more interpretable system that’s easier to maintain, runs quickly, and has a lower carbon footprint.
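
One way to picture "as low in the spectrum as we can" is a selection rule that walks the four tiers from simplest to most complex and stops at the first one that meets the requirement. This is a minimal sketch, not Schneider Electric's actual process: the `Tier` class, the `lowest_sufficient_tier` function, and the evaluation scores are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class Tier:
    name: str
    rank: int  # 1 = simplest and cheapest to run, 4 = most complex

TIERS = [
    Tier("Math", 1),
    Tier("Traditional AI", 2),
    Tier("Generative AI", 3),
    Tier("Agentic AI", 4),
]

def lowest_sufficient_tier(scores: dict, required: float) -> Optional[Tier]:
    """Return the lowest-ranked tier whose evaluation score meets the requirement."""
    for tier in sorted(TIERS, key=lambda t: t.rank):
        if scores.get(tier.name, 0.0) >= required:
            return tier
    return None

# Hypothetical evaluation scores for one problem.
example_scores = {
    "Math": 0.71,
    "Traditional AI": 0.93,
    "Generative AI": 0.95,
    "Agentic AI": 0.96,
}
choice = lowest_sufficient_tier(example_scores, required=0.90)
print(choice.name if choice else "No tier meets the requirement")
```

In this made-up case the higher tiers score slightly better, but the second tier already clears the bar, so it is the one selected.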

It’s also important to keep in mind that there are a variety of frontier LLMs, both closed and open-source, including top names like OpenAI’s GPT-family, Anthropic Claude models, Google’s Gemini, Meta’s Llama series, and more. These models often excel in different areas, and often the “most powerful” model isn’t required to derive value for the client. We can mix-and-match our choice of LLMs even within a multi-agent system or across agentic tool calls to benefit from smaller, more energy efficient models.

Schneider Electric’s job is to find the right model for every project in the spectrum, from traditional to generative to agentic.

Innovating Responsibly: Three Rules for Frugal AI

To manage the energy intensity of AI implementation, Schneider Electric follows three core principles:

1. Right-Size Every Model

Because not every task needs a massive model, Schneider Electric focuses on selecting the smallest model that meets performance requirements.

- Smaller models may not have all the capabilities of their larger peers, but when they meet our performance requirements, they’re preferred. The good news is that the cost of LLM inference is rapidly declining, often driven by smaller models packed with more intelligence. Andreessen Horowitz, which coined the term “LLMflation,” describes it this way: “The price decline in LLMs is even faster than that of compute cost during the PC revolution or bandwidth during the dotcom boom: For an LLM of equivalent performance, the cost is decreasing by 10x every year.” While cost isn’t our top priority, prices are typically correlated with the size of the model and the energy required to serve it.

- Token efficiency is key. The generation of tokens, units of text processed by LLMs, creates emissions. Reducing token usage can significantly cut both cost and carbon footprint. Once we’ve built agents or LLM-based systems and are satisfied with performance, we can begin the optimization process. This involves minimizing the number of LLM invocations and pruning inputs to limit prompt sizes.

- Regularly exploring and reviewing benchmarks keeps every solution we build flexible. This practice ensures the Schneider Electric data science team is ready to pivot when new developments arise. We experiment daily, analyze options for improving carbon efficiency, and monitor the latest performance benchmarks.
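
Right-sizing can also be applied per step inside an agent, so that each step gets the smallest model that clears its own bar. The sketch below assumes benchmark scores are already available for each candidate; the model names, parameter counts, task names, and scores are hypothetical, and parameter count is used only as a rough proxy for energy per call.

```python
from dataclasses import dataclass

@dataclass
class Candidate:
    name: str
    params_billions: float  # rough proxy for energy per call
    scores: dict            # benchmark score per task type, 0.0 to 1.0

def right_size(candidates, task, required_score):
    """Pick the smallest candidate whose score on `task` meets the requirement."""
    eligible = [c for c in candidates if c.scores.get(task, 0.0) >= required_score]
    if not eligible:
        raise LookupError(f"no candidate meets {required_score} on {task!r}")
    return min(eligible, key=lambda c: c.params_billions)

# Hypothetical candidates and benchmark results.
CANDIDATES = [
    Candidate("small-model", 8, {"extract_fields": 0.94, "plan": 0.70}),
    Candidate("medium-model", 70, {"extract_fields": 0.96, "plan": 0.88}),
    Candidate("large-model", 400, {"extract_fields": 0.97, "plan": 0.95}),
]

# Hypothetical per-step requirements for one agent workflow.
REQUIREMENTS = {"extract_fields": 0.92, "plan": 0.85}

routing = {
    step: right_size(CANDIDATES, step, required).name
    for step, required in REQUIREMENTS.items()
}
print(routing)
```

Here the largest model is never selected, even though it scores highest on both steps.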

Example:

Schneider Electric built an invoice registration agent to match incoming invoices to accounts in a database, previously a manual task. Initially, OpenAI’s GPT-4 was used for its accuracy; however, the solution was later optimized to use half the number of LLM calls without sacrificing performance. If calls aren’t needed, they aren’t made, which saves energy.

Sometimes, traditional models are the better choice, and larger models aren’t deployed simply because they’re available.
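
Pruning inputs to limit prompt size, as described under token efficiency, might look like the following. This is a sketch under stated assumptions: token counts are approximated by whitespace-separated words (real tokenizers differ), relevance scores are assumed to come from some upstream step, and the function names and sample text are hypothetical.

```python
def approx_tokens(text: str) -> int:
    """Very rough token estimate: whitespace-separated words."""
    return len(text.split())

def prune_context(chunks, budget):
    """Keep the highest-scoring chunks that fit within the token budget.

    `chunks` is a list of (relevance_score, text) pairs.
    Returns the kept texts and the approximate tokens used.
    """
    kept, used = [], 0
    for _score, text in sorted(chunks, key=lambda c: c[0], reverse=True):
        cost = approx_tokens(text)
        if used + cost <= budget:
            kept.append(text)
            used += cost
    return kept, used

# Hypothetical context chunks with assumed relevance scores.
chunks = [
    (0.9, "Invoice 1042 from Acme Corp for 12 units"),
    (0.2, "Company holiday schedule for next year is attached"),
    (0.7, "Account 77 belongs to Acme Corp"),
]
kept, used = prune_context(chunks, budget=15)
print(kept, used)
```

The low-relevance chunk is dropped, so the prompt carries fewer tokens into every call that uses it.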

2. Deploy Workloads Where Carbon Is Lower

Schneider Electric also considers where models are deployed. The carbon intensity of electricity grids varies widely across the globe, and simply positioning workloads in the right geographies can have a massive impact.

For example, manufacturers using Agentic AI to optimize production schedules or to predict equipment failures can now run these models in data centers powered by clean energy. By choosing locations with greener grids, such as those using hydro or wind power, companies can reduce the carbon footprint of their digital operations and gain efficiency.
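
The effect of location can be estimated by multiplying a workload's energy use by the carbon intensity of the grid it runs on. The sketch below is illustrative: the region names and intensity values are hypothetical, and real grid intensities change over time.

```python
# Hypothetical grid carbon intensities in gCO2e per kWh.
REGION_INTENSITY = {
    "region-hydro": 30.0,
    "region-mixed": 350.0,
    "region-coal": 750.0,
}

def emissions_kg(energy_kwh: float, intensity_g_per_kwh: float) -> float:
    """Emissions in kg CO2e for a workload on a grid of given intensity."""
    return energy_kwh * intensity_g_per_kwh / 1000.0

def greenest_region(intensity_by_region, allowed=None):
    """Return the lowest-intensity region, optionally limited to `allowed`."""
    candidates = {
        region: value
        for region, value in intensity_by_region.items()
        if allowed is None or region in allowed
    }
    if not candidates:
        raise ValueError("no eligible regions")
    return min(candidates, key=candidates.get)

workload_kwh = 2000.0  # hypothetical monthly energy use of one workload
best = greenest_region(REGION_INTENSITY)
for region, intensity in REGION_INTENSITY.items():
    print(region, emissions_kg(workload_kwh, intensity), "kg CO2e")
print("Lowest-carbon choice:", best)
```

The optional `allowed` argument reflects that data residency or latency constraints may rule out some regions before carbon is considered.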

Schneider Electric is proving Frugal AI and Sustainable AI go hand in hand, and location matters.

3. Build Safety That Drives Efficiency

Security and sustainability are not mutually exclusive. Every chat interface is built to allow users to query data safely and efficiently.

- Safeguards are in place to prevent adversarial use and ensure conversations stay focused on energy and sustainability.

- Retrieval-Augmented Generation (RAG) incorporates information into prompts and agentic workflows in real-time, reducing the need for training or fine-tuning models. With RAG or agentic systems, we can make API calls to collect data or other resources from our data stores, improving accuracy and reducing risk.

In the financial industry, for example, firms could use a secure chat interface powered by RAG to let analysts query sensitive portfolio data without exposing it to the AI model, ensuring client information is protected.

The LLM itself isn’t asked to memorize everything it needs to know, which is a safety and sustainability feature by design. It helps build user trust, keeps the system efficient, and reassures users that the answers they receive are accurate and secure.
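
The RAG pattern described above can be sketched in a few lines: retrieve the most relevant records at query time and place them in the prompt, rather than training them into the model. This is a minimal illustration, not a production design. Word overlap stands in for the embedding similarity a real system would likely use, the sample documents are made up, and the call to an LLM is left out.

```python
def score(query: str, document: str) -> int:
    """Count shared lowercase words; a stand-in for embedding similarity."""
    return len(set(query.lower().split()) & set(document.lower().split()))

def retrieve(query: str, documents, k: int = 2):
    """Return up to k documents with the highest non-zero overlap score."""
    ranked = sorted(documents, key=lambda d: score(query, d), reverse=True)
    return [d for d in ranked[:k] if score(query, d) > 0]

def build_prompt(query: str, documents, k: int = 2) -> str:
    """Assemble a prompt that grounds the question in retrieved context."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )

# Hypothetical data store contents.
DOCUMENTS = [
    "Site A used 1200 MWh of electricity in 2023",
    "Site B installed solar panels in 2022",
    "The cafeteria menu changes weekly",
]
prompt = build_prompt("How much electricity did Site A use in 2023", DOCUMENTS)
print(prompt)
```

Only the retrieved records reach the prompt, which keeps it short and lets the data store be updated without retraining anything.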

Sustainability ROI Multipliers

Agentic AI systems are designed to operate autonomously and achieve specific goals, often requiring continuous inference and decision making. Without a frugal design and efficient architecture from the start, these systems can quickly become bloated, consuming excessive resources and driving up costs. However, when built with efficiency in mind, Frugal AI delivers tangible, long-term value.

By prioritizing frugality from the start, organizations can:

- Reduce lifecycle emissions across the AI system’s lifespan

- Improve maintainability and adaptability, making systems easier to update and scale

- Enable real-time decision making on low-power devices, expanding accessibility and use cases

Being frugal in AI system design isn’t just about being green; it’s about being smart. Every watt saved, every millisecond shaved off inference time, and every dollar not spent on unnecessary compute contributes to higher profit margins, faster innovation cycles, stronger brand equity, and lower risk exposure.

A recent study from Harvard Law found that 76% of companies pursue sustainability ROI to strengthen their overall sustainability narrative. Building agentic AI models with frugality in mind is yet another opportunity to position businesses for growth that extends far beyond the bottom line.

Conclusion

Power and responsibility can coexist. When AI agents are built ethically, intentionally, and sustainably, they can deliver transformative results.

At Schneider Electric, we believe that the future of AI must be efficient, inclusive, and ethical. That’s why we’re embedding frugal AI into everything we do, because innovation should serve both people and the planet.

Read more about Schneider Electric’s platform to accelerate supply chain decarbonization.